Cloud images, qemu, cloud-init and snapd spread tests April 16, 2019

Posted by jdstrand in canonical, ubuntu, ubuntu-server, uncategorized. Tags: snapd


For testing, it is useful to work with official cloud images as local VMs. Eg, when I work on snapd, I like to have different images available to work with its spread tests.

The autopkgtest package makes working with Ubuntu images quite easy:

$ sudo apt-get install qemu-kvm autopkgtest

$ autopkgtest-buildvm-ubuntu-cloud -r bionic # -a i386

Downloading https://cloud-images.ubuntu.com/bionic/current/bionic-server-cloudimg-amd64.img...

# and to integrate into spread

$ mkdir -p ~/.spread/qemu

$ mv ./autopkgtest-bionic-amd64.img ~/.spread/qemu/ubuntu-18.04-64.img

# now can run any test from 'spread -list' starting with

# 'qemu:ubuntu-18.04-64:'

This post isn’t really about autopkgtest, snapd or spread specifically though….

I found myself wanting an official Debian unstable cloud image so I could use it in spread while testing snapd. I learned it is easy enough to create the images yourself but then I found that Debian started providing raw and qcow2 cloud images for use in OpenStack and so I started exploring how to use them and generalize how to use arbitrary cloud images.

General procedure

The basic steps are:

- obtain a cloud image

- make copy of the cloud image for safekeeping

- resize the copy

- create a seed.img with cloud-init to set the username/password

- boot with networking and the seed file

- login, update, etc

- cleanly shutdown

- use normally (ie, without seed file)

In this case, I grabbed the ‘debian-testing-openstack-amd64.qcow2’ image from http://cdimage.debian.org/cdimage/openstack/testing/ and verified it. Since this is based on Debian ‘testing’ (current stable images are also available), when I copied it I named it accordingly. Eg, I knew for spread it needed to be ‘debian-sid-64.img’ so I did:

$ cp ./debian-testing-openstack-amd64.qcow2 ./debian-sid-64.img

Or, if you downloaded a raw image, convert it to qcow2 instead of using cp, like so:

$ qemu-img convert -f raw ./.img -O qcow2 ./.img

Then I resized it; I picked 20G since I recalled that is what autopkgtest uses:

$ qemu-img resize debian-sid-64.img 20G

These are already setup for cloud-init, so I created a cloud-init data file (note, the ‘#cloud-config’ comment at the top is important):

$ cat ./debian-data

#cloud-config

password: debian

chpasswd: { expire: false }

ssh_pwauth: true

and a cloud-init meta-data file:

$ cat ./debian-meta-data

instance-id: i-debian-sid-64

local-hostname: debian-sid-64

and fed that into cloud-localds to create a seed file:

$ cloud-localds -v ./debian-seed.img ./debian-data ./debian-meta-data
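The two input files above can also be written by a small helper, which makes the seed easier to regenerate for other distros. This is only a sketch: the function name `write_seed_inputs` and its arguments are made up for illustration, and the file contents simply mirror the ones shown above.

```shell
#!/bin/sh
# Hypothetical helper (not part of cloud-init or cloud-utils): write the
# user-data and meta-data files shown above into the current directory.
# Usage: write_seed_inputs PREFIX HOSTNAME PASSWORD
write_seed_inputs() {
    prefix=$1
    hostname=$2
    password=$3

    # user-data: the '#cloud-config' first line is required
    cat > "./${prefix}-data" <<EOF
#cloud-config
password: ${password}
chpasswd: { expire: false }
ssh_pwauth: true
EOF

    # meta-data: instance id and hostname, as in the example above
    cat > "./${prefix}-meta-data" <<EOF
instance-id: i-${hostname}
local-hostname: ${hostname}
EOF
}
```

Running `write_seed_inputs debian debian-sid-64 debian` should produce the same ‘debian-data’ and ‘debian-meta-data’ files as above, ready to be passed to cloud-localds.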

Then start the image with:

$ kvm -M pc -m 1024 -smp 1 -monitor pty -nographic -hda ./debian-sid-64.img -drive "file=./debian-seed.img,if=virtio,format=raw" -net nic -net user,hostfwd=tcp:127.0.0.1:59355-:22

(I’m using the invocation that is reminiscent of how spread invokes it; feel free to use a virtio invocation as described by Scott Moser if that better suits your environment.)

Here, the “59355” can be any unused high port. The idea is after the image boots, you can login with ssh using:

$ ssh -p 59355 debian@127.0.0.1
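Since the forwarded port has to match between the kvm and ssh commands, it can help to build both from one place. The following is a rough sketch, not something spread provides: `kvm_cmdline` and `ssh_cmdline` are hypothetical names, and the functions only print the commands used above rather than running them (paths containing spaces are not handled).

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the kvm command used above.
# Usage: kvm_cmdline IMAGE PORT [SEED]
kvm_cmdline() {
    image=$1
    port=$2
    seed=${3:-}

    cmd="kvm -M pc -m 1024 -smp 1 -monitor pty -nographic -hda $image"
    # Only attach the seed image on boots where cloud-init should see it
    if [ -n "$seed" ]; then
        cmd="$cmd -drive file=$seed,if=virtio,format=raw"
    fi
    # Forward the chosen local port to the guest's ssh port
    cmd="$cmd -net nic -net user,hostfwd=tcp:127.0.0.1:$port-:22"
    printf '%s\n' "$cmd"
}

# Hypothetical helper: print the matching ssh command.
# Usage: ssh_cmdline PORT USER
ssh_cmdline() {
    printf 'ssh -p %s %s@127.0.0.1\n' "$1" "$2"
}
```

For example, `kvm_cmdline ./debian-sid-64.img 59355 ./debian-seed.img` prints the first-boot invocation, and leaving off the third argument prints the one without the seed file.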

Once logged in, perform any updates, etc that you want in place when tests are run, then disable cloud-init for the next boot and cleanly shutdown with:

$ sudo touch /etc/cloud/cloud-init.disabled

$ sudo shutdown -h now

The above is the generalized procedure which can hopefully be adapted for other distros that provide cloud images, etc.

For integrating into spread, just copy the image to ‘~/.spread/qemu’, naming it how spread expects. spread uses ‘-snapshot’ with the VM as part of its tests, so test runs do not modify the image on disk. If you want to update the image later because it has gone out of date, omit the seed file (and optionally ‘-net nic -net user,hostfwd=tcp:127.0.0.1:59355-:22’ if you don’t need port forwarding), and use:

$ kvm -M pc -m 1024 -smp 1 -monitor pty -nographic -hda ./debian-sid-64.img

UPDATE 2019-04-23: the above is confirmed to work with Fedora 28, 29, 31 and 32 (though, if using the resulting image to test snapd, be sure to configure the password as ‘fedora’ and then be sure to ‘yum update ; yum install kernel-modules nc strace’ in the image).

UPDATE 2019-04-22: the above is confirmed to work with CentOS 7 and CentOS 8 (though, if using the resulting image to test snapd, be sure to configure the password as ‘centos’ and then be sure to ‘yum update ; yum install epel-release ; yum install golang nc strace’ in the image).

UPDATE 2020-02-16: https://wiki.debian.org/ContinuousIntegration/autopkgtest documents how to create autopkgtest images for Debian as ‘$ autopkgtest-build-qemu unstable autopkgtest-unstable.img’. This is confirmed to work on an Ubuntu 20.04 host but broken on an Ubuntu 18.04 host (seems to be https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=922501).

UPDATE 2020-06-27: the above is confirmed to work with Amazon Linux 2. The user is ‘ec2-user’. When booting without the seed image, password auth is disabled and the boot is slow: nfs is enabled and requires gssproxy to start before it, but gssproxy.service doesn’t start due to lack of randomness. To work around this, login and do the following (I still need to investigate and fix this correctly via cloud-init, or just remove nfs-utils):

$ sudo -i sh -c 'echo PasswordAuthentication yes >> /etc/ssh/sshd_config'

$ sudo mkdir /etc/systemd/system/gssproxy.service.d

$ sudo sh -c 'sed "s/Before=.*/Before=/" /usr/lib/systemd/system/gssproxy.service > /etc/systemd/system/gssproxy.service.d/local.conf'

$ sudo systemctl daemon-reload

UPDATE 2020-07-21: the above is confirmed to work with OpenSUSE 15.2 and the Tumbleweed JeOS appliance image. Spread doesn’t yet have this setup for qemu, but the user is ‘opensuse’. Configure the password as ‘opensuse’ then be sure to ‘zypper update ; zypper install apparmor-abstractions apparmor-profiles apparmor-utils rpmbuild squashfs’ (with Tumbleweed JeOS, also install ‘kernel-default’ for squashfs kernel support).

Extra steps for Debian cloud images without default e1000 networking

Unfortunately, for the Debian cloud images, there were additional steps because spread doesn’t use virtio, but instead the default e1000 driver, and the Debian cloud kernel doesn’t include this:

$ grep E1000 /boot/config-4.19.0-4-cloud-amd64

# CONFIG_E1000 is not set

# CONFIG_E1000E is not set
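The same check can be wrapped in a function so it is easy to repeat against other images. This is a small sketch with a made-up name (`has_e1000`); it assumes the usual kernel config format where an enabled option appears as `CONFIG_...=y` or `CONFIG_...=m`.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given kernel config file enables
# e1000 or e1000e, either built in (=y) or as a module (=m).
# Usage: has_e1000 /boot/config-VERSION
has_e1000() {
    grep -Eq '^CONFIG_E1000E?=(y|m)$' "$1"
}
```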

So… when the machine booted, there was no networking. To adjust for this, I blew away the image, copied from the safely kept downloaded image, resized then started it with:

$ kvm -M pc -m 1024 -smp 1 -monitor pty -nographic -hda $HOME/.spread/qemu/debian-sid-64.img -drive "file=$HOME/.spread/qemu/debian-seed.img,if=virtio,format=raw" -device virtio-net-pci,netdev=eth0 -netdev type=user,id=eth0

This allowed the VM to start with networking, at which point I adjusted /etc/apt/sources.list to refer to ‘sid’ instead of ‘buster’ then ran apt-get update then apt-get dist-upgrade to upgrade to sid. I then installed the Debian distro kernel with:

$ sudo apt-get install linux-image-amd64

Then uninstalled the currently running kernel with:

$ sudo apt-get remove --purge linux-image-cloud-amd64 linux-image-4.19.0-4-cloud-amd64

(I used ‘dpkg -l | grep linux-image’ to see the cloud kernels I wanted to remove). Removing the package that provides the currently running kernel is a dangerous operation for most systems, so there is a scary message to abort the operation. In our case, it isn’t so scary (we can just try again ;) and this is exactly what we want to do.
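If you prefer not to pick the cloud kernels out by eye, the `dpkg -l` listing can be filtered. The sketch below uses a hypothetical function name (`cloud_kernels`) and assumes that the cloud kernel packages all have names starting with ‘linux-image-’ and containing ‘cloud’, as the two packages above do.

```shell
#!/bin/sh
# Hypothetical helper: read `dpkg -l` output on stdin and print the names
# of installed (status "ii") linux-image packages with "cloud" in the name.
cloud_kernels() {
    awk '$1 == "ii" && $2 ~ /^linux-image-.*cloud/ { print $2 }'
}
```

It would be used as `dpkg -l | cloud_kernels`, and the resulting names can then be reviewed before passing them to ‘apt-get remove --purge’.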

Next I cleanly shutdown the VM with:

$ sudo shutdown -h now

and try to start it again as in the ‘General procedure’ section above (I’m keeping the seed file here because I want cloud-init to be re-run with the e1000 driver):

$ kvm -M pc -m 1024 -smp 1 -monitor pty -nographic -hda ./debian-sid-64.img -drive "file=./debian-seed.img,if=virtio,format=raw" -net nic -net user,hostfwd=tcp:127.0.0.1:59355-:22

Now I try to login via ssh:

$ ssh -p 59355 debian@127.0.0.1

...

debian@127.0.0.1's password:

...

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Tue Apr 16 16:13:15 2019

debian@debian:~$ sudo touch /etc/cloud/cloud-init.disabled

debian@debian:~$ sudo shutdown -h now

Connection to 127.0.0.1 closed.

While this VM is no longer the official cloud image, it is still using the Debian distro kernel and Debian archive, which is good enough for my purposes and at this point I’m ready to use this VM in my testing (eg, for me, copy ‘debian-sid-64.img’ to ‘~/.spread/qemu’).

Monitoring your snaps for security updates February 1, 2019

Posted by jdstrand in canonical, security, ubuntu.

Some time ago we started alerting publishers when their stage-packages received a security update since the last time they built a snap. We wanted to create the right balance for the alerts and so the service currently will only alert you when there are new security updates against your stage-packages. In this manner, you can choose not to rebuild your snap (eg, since it doesn’t use the affected functionality of the vulnerable package) and not be nagged every day that you are out of date.

As nice as that is, sometimes you want to check these things yourself or perhaps hook the alerts into some form of automation or tool. While the review-tools had all of the pieces so you could do this, it wasn’t as straightforward as it could be. Now with the latest stable revision of the review-tools, this is easy:

$ sudo snap install review-tools

$ review-tools.check-notices \

~/snap/review-tools/common/review-tools_656.snap

{'review-tools': {'656': {'libapt-inst2.0': ['3863-1'],

'libapt-pkg5.0': ['3863-1'],

'libssl1.0.0': ['3840-1'],

'openssl': ['3840-1'],

'python3-lxml': ['3841-1']}}}

The review-tools are a strict mode snap and while it plugs the home interface, that is only for convenience, so I typically disconnect the interface and put things in its SNAP_USER_COMMON directory, like I did above.

Since it is now super easy to check a snap on disk, with a little scripting and a cron job, you can generate a machine readable report whenever you want. Eg, you can do something like the following:

$ cat ~/bin/check-snaps

#!/bin/sh

set -e

snaps="review-tools/stable rsync-jdstrand/edge"

tmpdir=$(mktemp -d -p "$HOME/snap/review-tools/common")

cleanup() {

rm -fr "$tmpdir"

}

trap cleanup EXIT HUP INT QUIT TERM

cd "$tmpdir" || exit 1

for i in $snaps ; do

snap=$(echo "$i" | cut -d '/' -f 1)

channel=$(echo "$i" | cut -d '/' -f 2)

snap download "$snap" "--$channel" >/dev/null

done

cd - >/dev/null || exit 1

/snap/bin/review-tools.check-notices "$tmpdir"/*.snap

or if you already have the snaps on disk somewhere, just do:

$ /snap/bin/review-tools.check-notices /path/to/snaps/*.snap

Now you can add the above to cron or some automation tool as a reminder of what needs updates. Enjoy!
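When running this from cron, you may only want output when the report changes, in the same spirit as the alert service not nagging every day. A minimal sketch, assuming a hypothetical helper named `report_if_changed` and a state file location of your choosing:

```shell
#!/bin/sh
# Hypothetical helper: read a report on stdin and print it only if it
# differs from the report saved by the previous run.
# Usage: some-command | report_if_changed STATE_FILE
report_if_changed() {
    state=$1
    new=$(cat)

    # Same as last time: stay quiet
    if [ -f "$state" ] && [ "$new" = "$(cat "$state")" ]; then
        return 0
    fi

    # New or changed report: remember it and print it
    printf '%s\n' "$new" > "$state"
    printf '%s\n' "$new"
}
```

For example, a cron job could run `~/bin/check-snaps | report_if_changed "$HOME/check-snaps.last"` (the state file path is just an example), so output is only produced when the set of notices changes.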

NetworkManager, systemd-networkd and slow boots September 29, 2017

Posted by jdstrand in ubuntu.

This may be totally obvious to many but I thought I would point out a problem I had with slow boot and how I solved it to highlight a neat feature of systemd to investigate the problem.

I noticed that I was having terrible boot times, so I used the `systemd-analyze critical-chain` command to see what the problem was. This showed me that `network-online.target` was taking 2 minutes. Looking at the logs (and confirming with `systemd-analyze blame`), I found it was timing out because `systemd-networkd` failed to either bring the interfaces online or mark them as failed (I was in an area where I had not connected to the existing wifi before and the wireless interface was scanning instead of failing). I looked around and found that I had no configuration for systemd-networkd (/etc/systemd/network was empty) or for netplan (/etc/netplan was empty), so I simply ran `sudo systemctl disable systemd-networkd` (leaving NetworkManager enabled) and I then had a very fast boot.
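To spot this kind of delay quickly, the `systemd-analyze blame` output can be filtered for units that took a minute or more. This is a rough sketch with a made-up function name (`slow_units`); it assumes blame prints one unit per line with the duration first, written like `2min 1.234s` once it reaches a minute.

```shell
#!/bin/sh
# Hypothetical helper: read `systemd-analyze blame` output on stdin and
# keep only the lines whose duration is a minute or longer.
slow_units() {
    # Drop leading alignment spaces, then match durations starting "<N>min "
    sed 's/^[[:space:]]*//' | grep -E '^[0-9]+min '
}
```

It would be used as `systemd-analyze blame | slow_units`, followed by `systemd-analyze critical-chain network-online.target` (or whichever unit stands out) to see what it was waiting on.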

I need to file a bug on the cause of the problem, but I found the `systemd-analyze` command so helpful, I wanted to share. :)

UPDATE: this bug was reported as https://launchpad.net/bugs/1714301 and fixed in systemd 234-2ubuntu11.

Easy ssh into libvirt VMs and LXD containers September 20, 2017

Posted by jdstrand in canonical, ubuntu, ubuntu-server.4 comments

Finding your VMs and containers via DNS resolution so you can ssh into them can be tricky. I was talking with Stéphane Graber today about this and he reminded me of his excellent article: Easily ssh to your containers and VMs on Ubuntu 12.04.

These days, libvirt has the `virsh domifaddr` command and LXD has a slightly different way of finding the IP address.

Here is an updated `~/.ssh/config` that I’m now using (thank you Stéphane for the update for LXD. Note, the original post had an extra ‘%h’ for lxd which is corrected as of 2020/06/23):

Host *.lxd

#User ubuntu

#StrictHostKeyChecking no

#UserKnownHostsFile /dev/null

ProxyCommand nc $(lxc list -c s4 $(echo %h | sed "s/\.lxd//g") | grep RUNNING | cut -d' ' -f4) %p

Host *.vm

#StrictHostKeyChecking no

#UserKnownHostsFile /dev/null

ProxyCommand nc $(virsh domifaddr $(echo %h | sed "s/\.vm//g") | awk -F'[ /]+' '{if (NR>2 && $5) print $5}') %p

You may want to uncomment `StrictHostKeyChecking` and `UserKnownHostsFile` depending on your environment (see `man ssh_config` for details).

With the above, I can ssh in with:

$ ssh foo.vm uptime

16:37:26 up 50 min, 0 users, load average: 0.00, 0.00, 0.00

$ ssh bar.lxd uptime

21:37:35 up 12:39, 2 users, load average: 0.55, 0.73, 0.66
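To see what the `.vm` ProxyCommand is doing, its two pieces can be tried on their own. The sketch below uses hypothetical function names (`strip_suffix`, `domifaddr_ip`) and reuses the awk expression from the config above; it assumes `virsh domifaddr` prints two header lines followed by rows that start with a space and end with an address in `IP/prefix` form.

```shell
#!/bin/sh
# Hypothetical helper: strip the pseudo-domain that the ssh config matches
# on, so that foo.vm or foo.lxd becomes foo.
strip_suffix() {
    printf '%s\n' "$1" | sed -e 's/\.vm$//' -e 's/\.lxd$//'
}

# Hypothetical helper: read `virsh domifaddr` output on stdin and print the
# address column without the /prefix. Splitting on runs of spaces and
# slashes makes the IP the fifth field (the first field is empty because
# each row starts with a space); NR > 2 skips the two header lines.
domifaddr_ip() {
    awk -F'[ /]+' '{ if (NR > 2 && $5) print $5 }'
}
```

For example, `virsh domifaddr "$(strip_suffix foo.vm)" | domifaddr_ip` should print the address that `nc` then connects to.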

Enjoy!

Snappy app trust model January 30, 2015

Posted by jdstrand in canonical, security, ubuntu, ubuntu-server, uncategorized.

Most of this has been discussed on mailing lists, blog entries, etc, while developing Ubuntu Touch, but I wanted to write up something that ties together these conversations for Snappy. This will provide background for the conversations surrounding hardware access for snaps that will be happening soon on the snappy-devel mailing list.

Background

Ubuntu Touch has several goals that all apply to Snappy:

- we want system-image upgrades

- we want to replace the distro archive model with an app store model for Snappy systems

- we want developers to be able to get their apps to users quickly

- we want a dependable application lifecycle

- we want the system to be easy to understand and to develop on

- we want the system to be secure

- we want an app trust model where users are in control and express that control in tasteful, easy to understand ways

Snappy adds a few things to the above (that pertain to this conversation):

- we want the system to be bulletproof (transactional updates with rollbacks)

- we want the system to be easy to use for system builders

- we want the system to be easy to use and understand for admins

Let’s look at what all these mean more closely.

system-image upgrades

- we want system-image upgrades

- we want the system to be bulletproof (transactional updates with rollbacks)

We want system-image upgrades so updates are fast, reliable and so people (users, admins, snappy developers, system builders, etc) always know what they have and can depend on it being there. In addition, if an upgrade goes bad, we want a mechanism to be able to rollback the system to a known good state. In order to achieve this, apps need to work within the system and live in their own area and not modify the system in unpredictable ways. The Snappy FHS is designed for this and the security policy enforces that apps follow it. This protects us from malware, sure, but at least as importantly, it protects us from programming errors and well-intentioned clever people who might accidentally break the Snappy promise.

app store

- we want to replace the distro archive model with an app store model

- we want developers to be able to get their apps to users quickly

Ubuntu is a fantastic distribution and we have a wonderfully rich archive of software that is refreshed on a cadence. However, the traditional distro model has a number of drawbacks and arguably the most important one is that software developers have an extremely high barrier to overcome to get their software into users hands on their own time-frame. The app store model greatly helps developers and users desiring new software because it gives developers the freedom and ability to get their software out there quickly and easily, which is why Ubuntu Touch is doing this now.

In order to enable developers in the Ubuntu app store, we’ve developed a system where a developer can upload software and have it available to users in seconds with no human review, intervention or snags. We also want users to be able to trust what’s in Ubuntu’s store, so we’ve created store policies that understand the Ubuntu snappy system such that apps do not require any manual review so long as the developer follows the rules. However, the Ubuntu Core system itself is completely flexible– people can install apps that are tightly confined, loosely confined, unconfined, whatever (more on this, below). In this manner, people can develop snaps for their own needs and distribute them however they want.

It is the Ubuntu store policy that dictates what is in the store. The existing store policy is in place to improve the situation and is based on our experiences with the traditional distro model and attempts to build app store-like experiences on top of it (eg, MyApps).

application lifecycle

- dependable application lifecycle

This has not been discussed as much with Snappy for Ubuntu Core, but Touch needs to have a good application lifecycle model such that apps cannot run unconstrained and unpredictably in the background. In other words, we want to avoid problems with battery drain and slow systems on Touch. I think we’ve done a good job so far on Touch, and this story is continuing to evolve.

(I mention application lifecycle in this conversation for completeness and because application lifecycle and security work together via the app’s application id)

security

- we want the system to be secure

- we want an app trust model where users are in control and express that control in tasteful, easy to understand ways

Everyone wants a system that they trust and that is secure, and security is one of the core tenets of Snappy systems. For Ubuntu Touch, we’ve created a system that is secure, that is easy to use and understand by users, and that still honors relevant, meaningful Linux traditions. For Snappy, we’ll be adding several additional security features (eg, seccomp, controlled abstract socket communication, firewalling, etc).

Our security story and app store policies give us something that is between Apple and Google. We have a strong security story that has a number of similarities to Apple, but a lightweight store policy akin to Google Play. In addition to that, our trust model is that apps not needing manual review are untrusted by the OS and have limited access to the system. On Touch we use tasteful, contextual prompting so the user may trust the apps to do things beyond what the OS allows on its own (simple example, app needs access to location, user is prompted at the time of use if the app can access it, user answers and the decision is remembered next time).

Snappy for Ubuntu Core is different not only because the UI supports a CLI, but also because we’ve defined a Snappy for Ubuntu Core user that is able to run the ‘snappy’ command as someone who is an admin, a system builder, a developer and/or someone otherwise knowledgeable enough to make a more informed trust decision. (This will come up again later, below)

easy to use

- we want the system to be easy to understand and to develop on

- we want the system to be easy to use for system builders

- we want the system to be easy to use and understand for admins

We want a system that is easy to use and understand. It is key that developers are able to develop on it, system builders able to get their work done and admins can install and use the apps from the store.

For Ubuntu Touch, we’ve made a system that is easy to understand and to develop on with a simple declarative permissions model. We’ll refine that for Snappy and make it easy to develop on too. Remember, the security policy is there not just so we can be ‘super secure’ but because it is what gives us the assurances needed for system upgrades, a safe app store and an altogether bulletproof system.

As mentioned, the system we have designed is super flexible. Specifically, the underlying system supports:

- apps working wholly within the security policy (aka, ‘common’ security policy groups and templates)

- apps declaring specific exceptions to the security policy

- apps declaring to use restricted security policy

- apps declaring to run (effectively) unconfined

- apps shipping hand-crafted policy (that can be strict or lenient)

(Keep in mind the Ubuntu App Store policy will auto-accept apps falling under the first item above and trigger manual review for the others)

The above all works today (though it isn’t always friendly– we’re working on that) and the developer is in control. As such, Snappy developers have a plethora of options and can create snaps with security policy for their needs. When the developer wants to ship the app and make it available to all Snappy users via the Ubuntu App Store, then the developer may choose to work within the system to have automated reviews or choose not to and manage the process via manual reviews/commercial relationship with Canonical.

Moving forward

The above works really well for Ubuntu Touch, but today there is too much friction with regard to hardware access. We will make this experience better without compromising on any of our goals. How do we put this all together, today, so people can get stuff done with snappy without sacrificing on our goals, making it harder on ourselves in the future or otherwise opening Pandora’s box? We don’t want to relax our security policy, because we can’t make the bulletproof assurances we are striving for and it would be hard to tighten the security. We could also add some temporary security policy that adds only certain accesses (eg, serial devices) but, while useful, this is too inflexible. We also don’t want to have apps declare the accesses themselves to automatically add the necessary security policy, because this (potentially) privileged access is then hidden from the Snappy for Ubuntu Core user.

The answer is simple when we remember that the Snappy for Ubuntu Core user (ie, the one who is able to run the snappy command) is knowledgeable enough to make the trust decision for giving an app access to hardware. In other words, let the admin/developer/system builder be in control.

immediate term

The first thing we are going to do is unblock people and adjust snappy to give the snappy core user the ability to add specific device access to snap-specific security policy. In essence you’ll install a snap, then run a command to give the snap access to a particular device, then you’re done. This simple feature will unblock developers and snappy users immediately while still supporting our trust-model and goals fully. Plus it will be worth implementing since we will likely always want to support this for maximum flexibility and portability (since people can use traditional Linux APIs).

The user experience for this will be discussed and refined on the mailing list in the coming days.

short term

After that, we’ll build on this and explore ways to make the developer and user experience better through integration with the OEM part and ways of interacting with the underlying system so that the user doesn’t have to necessarily know the device name to add, but can instead be given smart choices (this can have tie-ins to the web interface for snappy too). We’ll want to be thinking about hotpluggable devices as well.

Since this all builds on the concept of the immediate term solution, it also supports our trust-model and goals fully and is relatively easy to implement.

future

Once we have the above in place, we should have a reasonable experience for snaps needing traditional device access. This will give us time to evaluate how people are accessing hardware and see if we can make things even better by using frameworks and/or a hardware abstraction layer. In this manner, snaps can program to an easy to use API and the system can mediate access to the underlying hardware via that API.

Snappy security December 10, 2014

Posted by jdstrand in canonical, security, ubuntu, ubuntu-server.

Ubuntu Core with Snappy was recently announced and a key ingredient for snappy is security. Snappy applications are confined by AppArmor and the confinement story for snappy is an evolution of the security model for Ubuntu Touch. The basic concepts for confined applications and the AppStore model pertain to snappy applications as well. In short, snappy applications are confined using AppArmor by default and this is achieved through an easy to understand, use and developer-friendly system. Read the snappy security specification for all the nitty gritty details.

A developer doc will be published soon.

Application isolation with AppArmor – part IV June 6, 2014

Posted by jdstrand in canonical, security, ubuntu, ubuntu-server.1 comment so far

Last time I discussed AppArmor, I talked about new features in Ubuntu 13.10 and a bit about ApplicationConfinement for Ubuntu Touch. With the release of Ubuntu 14.04 LTS, several improvements were made:

- Mediation of signals

- Mediation of ptrace

- Various policy updates for 14.04, including new tunables, better support for XDG user directories, and Unity7 abstractions

- Parser policy compilation performance improvements

- Google Summer of Code (SUSE sponsored) python rewrite of the userspace tools

Signal and ptrace mediation

Prior to Ubuntu 14.04 LTS, a confined process could send signals to other processes (subject to DAC) and ptrace other processes (subject to DAC and YAMA). AppArmor on 14.04 LTS adds mediation of both signals and ptrace which brings important security improvements for all AppArmor confined applications, such as those in the Ubuntu AppStore and qemu/kvm machines as managed by libvirt and OpenStack.

When developing policy for signal and ptrace rules, it is important to remember that AppArmor does a cross check such that AppArmor verifies that:

- the process sending the signal/performing the ptrace is allowed to send the signal to/ptrace the target process

- the target process receiving the signal/being ptraced is allowed to receive the signal from/be ptraced by the sender process

Signal(7) permissions use the ‘signal’ rule with the ‘receive/send’ permissions governing signals. PTrace permissions use the ‘ptrace’ rule with the ‘trace/tracedby’ permissions governing ptrace(2) and the ‘read/readby’ permissions governing certain proc(5) filesystem accesses, kcmp(2), futexes (get_robust_list(2)) and perf trace events.

Consider the following denial:

Jun 6 21:39:09 localhost kernel: [221158.831933] type=1400 audit(1402083549.185:782): apparmor="DENIED" operation="ptrace" profile="foo" pid=29142 comm="cat" requested_mask="read" denied_mask="read" peer="unconfined"

This demonstrates that the ‘cat’ binary running under the ‘foo’ profile was unable to read the contents of a /proc entry (in my test, /proc/11300/environ). To allow this process to read /proc entries for unconfined processes, the following rule can be used:

ptrace (read) peer=unconfined,

If the receiving process was confined, the log entry would say ‘peer=”<profile name>”‘ and you would adjust the ‘peer=unconfined’ in the rule to match that in the log denial. In this case, because unconfined processes implicitly can be readby all other processes, we don’t need to specify the cross check rule. If the target process was confined, the profile for the target process would need a rule like this:

ptrace (readby) peer=foo,

Likewise for signal rules, consider this denial:

Jun 6 21:53:15 localhost kernel: [222005.216619] type=1400 audit(1402084395.937:897): apparmor="DENIED" operation="signal" profile="foo" pid=29069 comm="bash" requested_mask="send" denied_mask="send" signal=term peer="unconfined"

This shows that ‘bash’ running under the ‘foo’ profile tried to send the ‘term’ signal to an unconfined process (in my test, I used ‘kill 11300’) and was blocked. Signal rules use ‘receive’ and ‘send’ to determine access, so we can add a rule like so to allow sending of the signal:

signal (send) set=("term") peer=unconfined,

Like with ptrace, a cross-check is performed with signal rules but implicit rules allow unconfined processes to send and receive signals. If pid 11300 were confined, you would adjust the ‘peer=’ in the rule of the foo profile to match the denial in the log, and then adjust the target profile to have something like:

signal (receive) set=("term") peer=foo,
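The mapping from a denial to a rule is mechanical enough to sketch in a few lines of shell. This is only an illustration and not one of the AppArmor userspace tools: `field` and `suggest_rule` are made-up names, only the ptrace and signal denials shown above are handled, and profile or peer names containing spaces are not supported.

```shell
#!/bin/sh
# Hypothetical helper: print the value of NAME=value or NAME="value" from
# the log line held in $line.
field() {
    printf '%s\n' "$line" |
        sed -n "s/.* $1=\"\{0,1\}\([^\" ]*\)\"\{0,1\}.*/\1/p"
}

# Hypothetical helper: given one AppArmor denial log line, print a rule for
# the denied profile that would allow the access.
# Usage: suggest_rule 'LOG LINE'
suggest_rule() {
    line=$1
    op=$(field operation)
    mask=$(field requested_mask)
    peer=$(field peer)

    case "$op" in
        ptrace)
            printf 'ptrace (%s) peer=%s,\n' "$mask" "$peer"
            ;;
        signal)
            printf 'signal (%s) set=("%s") peer=%s,\n' \
                "$mask" "$(field signal)" "$peer"
            ;;
        *)
            # Other operations are out of scope for this sketch
            return 1
            ;;
    esac
}
```

Fed the two denials above, this prints the same ‘ptrace (read) peer=unconfined,’ and ‘signal (send) set=("term") peer=unconfined,’ rules. Remember that if the peer is confined, its profile still needs the corresponding ‘readby’/‘tracedby’ or ‘receive’ rule for the cross check to pass.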

Signal and ptrace rules are very flexible and the AppArmor base abstraction in Ubuntu 14.04 LTS has several rules to help make profiling and transitioning to the new mediation easier:

# Allow other processes to read our /proc entries, futexes, perf tracing and

# kcmp for now

ptrace (readby),

# Allow other processes to trace us by default (they will need

# 'trace' in the first place). Administrators can override

# with:

# deny ptrace (tracedby) ...

ptrace (tracedby),

# Allow unconfined processes to send us signals by default

signal (receive) peer=unconfined,

# Allow us to signal ourselves

signal peer=@{profile_name},

# Checking for PID existence is quite common so add it by default for now

signal (receive, send) set=("exists"),

Note the above uses the new ‘@{profile_name}’ AppArmor variable, which is particularly handy with ptrace and signal rules. See man 5 apparmor.d for more details and examples.

14.10

Work still remains and some of the things we’d like to do for 14.10 include:

- Finishing mediation for non-networking forms of IPC (eg, abstract sockets). This will be done in time for the phone release.

- Have services integrate with AppArmor and the upcoming trust-store to become trusted helpers (also for phone release)

- Continue work on networking IPC (for 15.04)

- Continue to work with the upstream kernel on kdbus

- Work continues on LXC stacking and we hope to have stacked profiles within the current namespace for 14.10. Full support for stacked profiles, where different host and container policy applies to the same binary at the same time, should be ready by 15.04

- Various fixes to the python userspace tools for remaining bugs. These will also be backported to 14.04 LTS

Until next time, enjoy!

Application isolation with AppArmor – part III October 18, 2013

Posted by jdstrand in canonical, security, ubuntu.

Last time I discussed AppArmor, I gave an overview of how AppArmor is used in Ubuntu. With the release of Ubuntu 13.10, a number of features have been added:

- Support for fine-grained DBus mediation for bus, binding name, object path, interface and member/method

- The return of named AF_UNIX socket mediation

- Integration with several services as part of the ApplicationConfinement work in support of click packages and the Ubuntu appstore

- Better support for policy generation via the aa-easyprof tool and apparmor-easyprof-ubuntu policy

- Native AppArmor support in Upstart

DBus mediation

Prior to Ubuntu 13.10, access to the DBus system bus was on/off and there was no mediation of the session bus or any other DBus buses, such as the accessibility bus. 13.10 introduces fine-grained DBus mediation. In a nutshell, you define ‘dbus’ rules in your AppArmor policy just like any other rules. When an application that is confined by AppArmor uses DBus, the dbus-daemon queries the kernel on whether the application is allowed to perform this action. If it is, DBus proceeds normally; if not, DBus denies the access and logs it to syslog. An example denial is:

Oct 18 16:02:50 localhost dbus[3626]: apparmor="DENIED" operation="dbus_method_call" bus="session" path="/ca/desrt/dconf/Writer/user" interface="ca.desrt.dconf.Writer" member="Change" mask="send" name="ca.desrt.dconf" pid=30538 profile="/usr/lib/firefox/firefox{,*[^s][^h]}" peer_pid=3927 peer_profile="unconfined"

We can see that firefox tried to access gsettings (dconf) but was denied.
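When reading many of these denials, it can help to split the line into its key=value fields. The following is a minimal sketch, not part of any AppArmor tool: the function name `parse_denial` is made up for illustration, and it assumes the log line follows the quoting shown in the example above.

```python
import shlex


def parse_denial(line: str) -> dict:
    """Return the key=value fields of a denial log line as a dict.

    Hypothetical helper for illustration; it assumes values are either
    bare words or double-quoted strings, as in the example denial.
    """
    fields = {}
    # shlex.split honours the double quotes and strips them from values
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        # Skip the syslog prefix (date, host, 'dbus[3626]:'), which has no '='
        if sep and key.isidentifier():
            fields[key] = value
    return fields


line = (
    'Oct 18 16:02:50 localhost dbus[3626]: apparmor="DENIED" '
    'operation="dbus_method_call" bus="session" '
    'path="/ca/desrt/dconf/Writer/user" interface="ca.desrt.dconf.Writer" '
    'member="Change" mask="send" name="ca.desrt.dconf" pid=30538 '
    'profile="/usr/lib/firefox/firefox{,*[^s][^h]}" peer_pid=3927 '
    'peer_profile="unconfined"'
)

denial = parse_denial(line)
# Show which profile was denied which access on which interface and member
print(denial["profile"], denial["mask"], denial["interface"], denial["member"])
```

The ‘bus’, ‘path’, ‘interface’, ‘member’ and ‘name’ fields in the result correspond to the parts of a ‘dbus’ rule described next.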

DBus rules are a bit more involved than most other AppArmor rules, but they are still quite readable and understandable. For example, consider the following rule:

dbus (send)
    bus=session
    path=/org/freedesktop/DBus
    interface=org.freedesktop.DBus
    member=Hello
    peer=(name=org.freedesktop.DBus),

This rule says that the application is allowed to use the ‘Hello’ method on the ‘org.freedesktop.DBus’ interface of the ‘/org/freedesktop/DBus’ object for the process bound to the ‘org.freedesktop.DBus’ name on the ‘session’ bus. That is fine-grained indeed!

However, rules don’t have to be that fine-grained. For example, all of the following are valid rules:

dbus,

dbus bus=accessibility,

dbus (send) bus=session peer=(name=org.a11y.Bus),

A couple of things to keep in mind:

- Because dbus-daemon is the one performing the mediation, DBus denials are logged to syslog and not kern.log. Recent versions of Ubuntu log kernel messages to /var/log/syslog, so I’ve gotten in the habit of just looking there for everything

- The message content of DBus traffic is not examined

- The userspace tools don’t understand DBus rules yet. That means aa-genprof, aa-logprof and aa-notify don’t work with these new rules. The userspace tools are being rewritten and support will be added in a future release.

- The less fine-grained the rule, the more access is permitted. So ‘dbus,’ allows unrestricted access to DBus.

- Responses to messages are implicitly allowed, so if you allow an application to send a message to a service, the service is allowed to respond without needing a corresponding rule.

- dbus-daemon is considered a trusted helper (it integrates with AppArmor to enforce the mediation) and is not confined by default.

As a transitional step, existing policy for packages in the Ubuntu archive that use DBus will continue to have full access to DBus, but future Ubuntu releases may provide fine-grained DBus rules for this software. See ‘man 5 apparmor.d’ for more information on DBus mediation and AppArmor.

Application confinement

Ubuntu will support an app store model where software that has not gone through the traditional Ubuntu archive process is made available to users. While this greatly expands the quantity of quality software available to Ubuntu users, it also introduces new security risks. An important part of addressing these risks is to run applications under confinement. In this manner, apps are isolated from each other and are limited in what they can do on the system. AppArmor is at the heart of the Ubuntu ApplicationConfinement story and is already working on Ubuntu 13.10 for phones in the appstore. A nice introduction for developers on what the Ubuntu trust model is and how apps work within it can be found at http://developer.ubuntu.com.

In essence, a developer will design software with the Ubuntu SDK, then declare what type of application it is (which determines the AppArmor template to use), then declare any additional policy groups that the app needs. The templates and policy groups define AppArmor file, network, DBus and any other rules that are needed. The software is packaged as a lightweight click package and when it is installed, an AppArmor click hook is run which creates a versioned profile for the application based on the templates and policy groups. On Unity 8, application lifecycle makes sure that the app is launched under confinement via an upstart job. For other desktop environments, a desktop file is generated in ~/.local/share/applications that prepends ‘aa-exec-click’ to the Exec line. The upstart job and ‘aa-exec-click’ not only launch the app under confinement, but also set up the environment (eg, set TMPDIR to an application specific directory). Various APIs have been implemented so apps can access files (eg, Pictures via the gallery app), connect to services (eg, location and online accounts) and work within Unity (eg, the HUD) safely and in a controlled and isolated manner.

The work is not done of course and several important features need to be implemented and bugs fixed, but application confinement has already added a very significant security improvement on Ubuntu 13.10 for phones.

14.04

As mentioned, work remains. Some of the things we’d like to do for 14.04 include:

- Finishing IPC mediation for things like signals, networking and abstract sockets

- Work on APIs and AppArmor integration of services to work better on the converged device (ie, with traditional desktop applications)

- Work with the upstream kernel on kdbus so we are ready for when that is available

- Finish the LXC stacking work to allow different host and container policy for the same binary at the same time

- While Mir already handles keyboard and mouse sniffing, we’d like to integrate with Mir in other ways where applicable (note, X mediation for keyboard/mouse sniffing, clipboard, screen grabs, drag and drop, and xsettings is not currently scheduled nor is wayland support. Both are things we’d like to have though, so if you’d like to help out here, join us on #apparmor on OFTC to discuss how to contribute)

Until next time, enjoy!

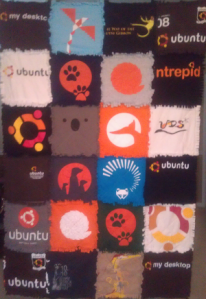

So many T-shirts, too little time… March 30, 2013

Posted by jdstrand in canonical, ubuntu.

I’ve been involved with Ubuntu for quite a while now, and part of my journey has included accumulating a bunch of Ubuntu T-shirts. I now have so many that I can’t possibly wear them all, so my wife gave me a really cool gift today: a quilt made out of a bunch of my old Ubuntu T-shirts! :) Check it out:

Fun *and* cozy!!

Application isolation with AppArmor – part II February 8, 2013

Posted by jdstrand in canonical, security, ubuntu.

Last time I discussed AppArmor, giving some background and some information on how it can be used. This time I’d like to discuss how AppArmor is used within Ubuntu. The information in this part applies to Ubuntu 12.10 and later, and unless otherwise noted, Ubuntu 12.04 LTS (and because we also push our changes into Debian, much of this will apply to Debian as well).

/etc/apparmor.d

- Ubuntu follows the upstream policy layout and naming conventions and uses the abstractions extensively. A few abstractions are Ubuntu-specific and they are prefixed with ‘ubuntu’. Eg /etc/apparmor.d/abstractions/ubuntu-browsers.

- Ubuntu encourages the use of the include files in /etc/apparmor.d/local in any shipped profiles. This allows administrators to make profile additions and apply overrides without having to change the shipped profile (the profile must then be reloaded with apparmor_parser; see /etc/apparmor.d/local/README for more information)

- All profiles in Ubuntu use ‘#include <tunables/global>’, which pulls in a number of tunables: ‘@{PROC}’ from ‘tunables/proc’, ‘@{HOME}’ and ‘@{HOMEDIRS}’ from ‘tunables/home’ and @{multiarch} from ‘tunables/multiarch’

- In addition to ‘tunables/home’, Ubuntu utilizes the ‘tunables/home.d/ubuntu’ file so that ‘@{HOMEDIRS}’ is preseedable at installation time, or adjustable via ‘sudo dpkg-reconfigure apparmor’

- Binary caches in /etc/apparmor.d/cache are used to speed up boot time.

- Ubuntu uses the /etc/apparmor.d/disable and /etc/apparmor.d/force-complain directories. Touching (or symlinking) a file with the same name as the profile in one of these directories will cause AppArmor to either skip policy load (eg disable/usr.sbin.rsyslogd) or load in complain mode (force-complain/usr.bin.firefox)
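The disable and force-complain convention in the last item can be sketched as a small lookup. This is only an illustration and not how the AppArmor init scripts are implemented: the function name `load_mode` and its return strings are made up, and treating ‘disable’ as taking precedence when a profile appears in both directories is an assumption.

```python
from pathlib import Path
import tempfile


def load_mode(profile_name: str, base: Path = Path("/etc/apparmor.d")) -> str:
    """Report how a profile would be treated, based on marker files.

    Hypothetical helper; checking 'disable' before 'force-complain'
    is an assumption made for this sketch.
    """

    def marked(directory: str) -> bool:
        marker = base / directory / profile_name
        # A touched file or a symlink (even a dangling one) counts as a marker
        return marker.exists() or marker.is_symlink()

    if marked("disable"):
        return "disabled"
    if marked("force-complain"):
        return "complain"
    return "as-shipped"


# Demonstrate against a throwaway directory rather than the real /etc/apparmor.d
with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    (base / "disable").mkdir()
    (base / "force-complain").mkdir()
    (base / "disable" / "usr.sbin.rsyslogd").touch()
    (base / "force-complain" / "usr.bin.firefox").touch()
    for name in ("usr.sbin.rsyslogd", "usr.bin.firefox", "usr.sbin.tcpdump"):
        print(name, load_mode(name, base))
```

On a real system the same idea applies to /etc/apparmor.d, where the marker file carries the same name as the profile file.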

Boot

Policy load happens in 2 stages during boot:

- within the job of a package with an Upstart job file

- via the SysV initscript (/etc/init.d/apparmor)

For packages with an Upstart job and an AppArmor profile (eg, cups), the job file must load the AppArmor policy prior to execution of the confined binary. As a convenience, the /lib/init/apparmor-profile-load helper is provided to simplify Upstart integration. For packages that ship policy but do not have a job file (eg, evince), policy must be loaded sometime before application launch, which is why stage 2 is needed. Stage 2 will (re)load all policy. Binary caches are used in both stages unless it is determined that policy must be recompiled (eg, booting a new kernel).

dhclient is a corner case because it needs to have its policy loaded very early in the boot process (ie, before any interfaces are brought up). To accommodate this, the /etc/init/network-interface-security.conf upstart job file is used.

For more information on why this implementation is used, please see the upstart mailing list.

Packaging

AppArmor profiles are shipped as Debian conffiles (ie, the package manager treats them specially during upgrades). AppArmor profiles in Ubuntu should use an include directive to include a file from /etc/apparmor.d/local so that local site changes can be made without modifying the shipped profile. When a package ships an AppArmor profile, it is added to /etc/apparmor.d then individually loaded into the kernel (with caching enabled). On package removal, any symlinks/files in /etc/apparmor.d/disable, /etc/apparmor.d/force-complain and /etc/apparmor.d are cleaned up. Developers can use dh_apparmor to aid in shipping profiles with their packages.

Profiles and applications

Ubuntu ships a number of AppArmor profiles. The philosophy behind AppArmor profiles on Ubuntu is that the profile should add a meaningful security benefit while at the same time not introduce regressions in default or common functionality. Because it is all too easy for a security mechanism to be turned off completely in order to get work done, Ubuntu won’t ship overly restrictive profiles. If we can provide a meaningful security benefit with an AppArmor profile in the default install while still maintaining functionality for the vast majority of users, we may ship an enforcing profile. Unfortunately, because applications are not designed to run under confinement or are designed to do many things, it can be difficult to confine these applications while still maintaining usability. Sometimes we will ship disabled-by-default profiles so that people can opt into them if desired. Usually profiles are shipped in the package that provides the confined binary (eg, tcpdump ships its own AppArmor profile). Some in progress profiles are also offered in the apparmor-profiles package and are in complain mode by default. When filing AppArmor bugs in Ubuntu, it is best to file the bug against the application that ships the profile.

In addition to shipped profiles, some applications have AppArmor integration built in or have AppArmor confinement applied in a non-standard way.

Libvirt

An AppArmor sVirt driver is provided and enabled by default for libvirt managed QEMU virtual machines. This provides strong guest isolation and userspace host protection for virtual machines. AppArmor profiles are dynamically generated in /etc/apparmor.d/libvirt and usually you won’t have to worry about AppArmor confinement. If needed, profiles for the individual machines can be adjusted in /etc/apparmor.d/libvirt/libvirt-<uuid> or for all virtual machines in /etc/apparmor.d/abstractions/libvirt-qemu. See /usr/share/doc/libvirt-bin/README.Debian.gz for details.

LXC

LXC in Ubuntu uses AppArmor to help make sure processes in the container cannot access security-sensitive files on the host. lxc-start is confined by its own restricted profile which allows mounting in the container’s tree and, just before executing the container’s init, transitioning to the container’s own profile. See LXC in precise and beyond for details. Note, currently the libvirt-lxc sVirt driver does not have AppArmor support (confusingly, libvirt-lxc is a different project than LXC, but there are longer term plans to integrate the two and/or add AppArmor support to libvirt-lxc).

Firefox

Ubuntu ships a disabled-by-default profile for Firefox. While it is known to work well in the default Ubuntu installation, Firefox, like all browsers, is a very complex piece of software that can do much more than simply surf web pages, so enabling the profile in the default install could affect the overall usability of Firefox in Ubuntu. The goals of the profile are to provide a good usability experience with strong additional protection. The profile allows for the use of plugins and extensions, various helper applications, and access to files in the user’s HOME directory, removable media and network filesystems. The profile prevents execution of arbitrary code and malware, reading and writing of sensitive files such as ssh and gpg keys, and writing to files in the user’s default PATH. It also prevents reading of system and kernel files. All of this provides a level of protection exceeding that of normal UNIX permissions. Additionally, the profile’s use of includes allows for great flexibility for tightly confining firefox. /etc/apparmor.d/usr.bin.firefox is a very restricted profile, but includes both /etc/apparmor.d/local/usr.bin.firefox and /etc/apparmor.d/abstractions/ubuntu-browsers.d/firefox. /etc/apparmor.d/abstractions/ubuntu-browsers.d/firefox contains other include files for tasks such as multimedia, productivity, etc and the file can be manipulated via the aa-update-browser command to add or remove functionality as needed.

Chromium

A complain-mode profile for chromium-browser is available in the apparmor-profiles package. It uses the same methodology as the Firefox profile (see above) with a strict base profile including /etc/apparmor.d/abstractions/ubuntu-browsers.d/chromium-browser which can then include any number of additional abstractions (also configurable via aa-update-browser).

Lightdm guest session

The guest session in Ubuntu is protected via AppArmor. When selecting the guest session, Lightdm will transition to a restrictive profile that disallows access to others’ files.

libapache2-mod-apparmor

The libapache2-mod-apparmor package ships a disabled-by-default profile in /etc/apparmor.d/usr.lib.apache2.mpm-prefork.apache2. This provides a permissive profile for Apache itself but allows the administrator to add confinement policy for individual web applications as desired via AppArmor’s change_hat() mechanism (note, apache2-mpm-prefork must be used). An example profile for phpsysinfo is provided in the apparmor-profiles package. For more information on how to use AppArmor with Apache, please see /etc/apparmor.d/usr.lib.apache2.mpm-prefork.apache2 as well as the upstream documentation.

PAM

AppArmor also has a PAM module which allows great flexibility in setting up policies for different users. The idea behind pam_apparmor is simple: when someone uses a confined binary (such as login, su, sshd, etc), that binary will transition to an AppArmor role via PAM. Eg, if ‘su’ is configured for use with pam_apparmor, when a user invokes ‘su’, PAM is consulted and when the PAM session is started, pam_apparmor will change_hat() to a hat that matches the username, the primary group, or the DEFAULT hat (configurable). These hats (typically) provide rudimentary policy which declares the transition to a role profile when the user’s shell is started. The upstream documentation has an example of how to put this all together for ‘su’, unconfined admin users, a tightly confined user, and somewhat confined default users.
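The hat selection described above can be sketched as a first-match lookup. This is a rough illustration only and not pam_apparmor’s code: the function name `choose_hat`, the `available_hats` set and the ‘user’/‘group’/‘default’ labels are assumptions made for the example, and the order is passed in explicitly because it is configurable.

```python
from typing import Iterable, Optional, Set


def choose_hat(
    user: str,
    primary_group: str,
    available_hats: Set[str],
    order: Iterable[str],
) -> Optional[str]:
    """Return the first hat in the configured order that exists, or None.

    Hypothetical helper; 'order' is a sequence drawn from
    'user', 'group' and 'default'.
    """
    candidates = {
        "user": user,              # hat named after the username
        "group": primary_group,    # hat named after the primary group
        "default": "DEFAULT",      # the catch-all hat
    }
    for kind in order:
        hat = candidates[kind]
        if hat in available_hats:
            return hat
    return None


# Hats assumed to be defined in the confined binary's profile for this example
hats = {"admin", "DEFAULT"}
print(choose_hat("alice", "admin", hats, ("user", "group", "default")))
print(choose_hat("bob", "users", hats, ("user", "group", "default")))
```

In this sketch ‘alice’ has no hat of her own, so she falls through to the hat for her primary group, while ‘bob’ ends up in DEFAULT.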

aa-notify

aa-notify is a very simple program that can alert you when there are denials on the system. aa-notify will report any new AppArmor denials by consulting /var/log/kern.log (or /var/log/audit/audit.log if auditd is installed). For desktop users who install apparmor-notify, aa-notify is started on session start via /etc/X11/Xsession.d/90apparmor-notify and will watch the logs for any new denials and report them via notify-osd. Server users can add something like ‘/usr/bin/aa-notify -s 1 -v’ to their shell startup files (eg, ~/.profile) to show any AppArmor denials within the last day whenever they log in. See ‘man 8 aa-notify’ for details.

Current limitations

For all AppArmor can currently do and all the places it is used in Ubuntu, there are limitations in AppArmor 2.8 and lower (ie, what is in Ubuntu and other distributions). Right now, it is great for servers, network daemons and tools/utilities that process untrusted input. While it can provide a security benefit to client applications, there are currently a number of gaps in this area:

- Access to the DBus system bus is on/off and there is no mediation of the session bus or any other buses that rely on Unix abstract sockets, such as the accessibility bus

- AppArmor does not provide environment filtering beyond having the ability to clear the very limited set of glibc secure-exec variables

- Display management mediation is not present (eg, it doesn’t protect against X snooping)

- Networking mediation is too coarse-grained (eg you can allow ‘tcp’ but cannot restrict the binding port, integrate with a firewall or utilize secmark)

- LXC/containers support is functional, but not complete (eg, it doesn’t allow different host and container policy for the same binary at the same time)

- AppArmor doesn’t currently integrate with other client technologies that might be useful (eg gnome-keyring, signon/gnome-online-accounts and gsettings) and there is no facility to dynamically update a profile via a user prompt like a file/open dialog

Next time I’ll discuss ongoing and future work to address these limitations.